The year was 1992, and Allen Newell died on a summer day in Pittsburgh. The patriarch of symbolic AI, co-creator of the first artificial intelligence program, founder of an entire way of thinking about minds as symbol processors – gone. The first president of AAAI, the man who'd coined the term "knowledge level" to describe how intelligence operated above the machinery of neurons or silicon – silent.

At The Computer Museum in Boston, the first Loebner Prize had just concluded the previous November.[1] A contest to determine which machine could best pass for human in conversation. The contestants had names like "Whimsical Conversation," "PC Therapist III," and "The Cop and The Psychiatrist." None of them won the gold medal. None of them convinced the judges they were human. But for the first time, humans had gathered to formally evaluate whether machines could think, or at least appear to think, in the way Turing had imagined four decades earlier.[2]

In labs across America, a renaissance was taking shape. Researchers like Minton,[3] Selman,[4] Koutsoupias, and Papadimitriou[5] were publishing papers on local search algorithms – approaches that didn't attempt to reason their way systematically to solutions but instead explored possibilities, sometimes randomly, sometimes guided by heuristics. These "New Age algorithms"[6] combined randomness, parallelism, and pragmatic heuristics. They weren't elegant. They weren't symbolic. But they worked. Meanwhile, Boser, Guyon, and Vapnik took a different path, introducing Support Vector Machines and the kernel trick – an approach that was elegant, mathematically rigorous, and equally revolutionary.[7]

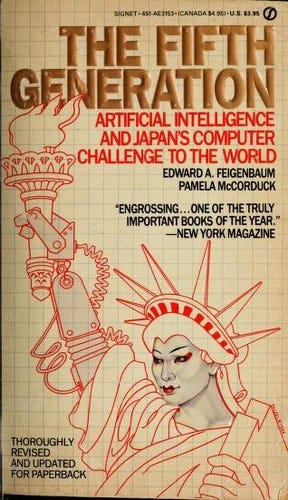

In Japan, officials were quietly closing the books on the Fifth Generation Computer Systems project. Ten years and $400 million spent pursuing the dream of intelligent computers powered by massively parallel processing and logic programming. The project that had once terrified American policymakers enough to launch the Microelectronics and Computer Technology Corporation (MCC) and DARPA’s Strategic Computing Initiative was ending not with a bang but with a technical report.

In Texas, a small game development company called id Software was putting the finishing touches on Wolfenstein 3D. Players would navigate three-dimensional corridors from a first-person perspective, shooting Nazi guards and collecting treasure. It was not the very first game of its kind, but it was the one that brought the first-person shooter to a mass audience. The blood was pixelated, the Nazis cartoonish, but the perspective was revolutionary – a step toward virtual worlds where artificial characters would eventually act with increasing sophistication.

In London, financier George Soros was betting against the British pound,[8] while in China, Deng Xiaoping was restarting reform after his "Southern Tour," under a slogan widely attributed to him: "To get rich is glorious." These tectonic economic shifts would reshape global power in ways that would eventually determine who controlled the resources – human, financial, and computational – that powered AI development.

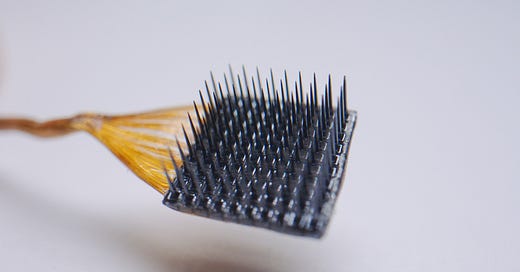

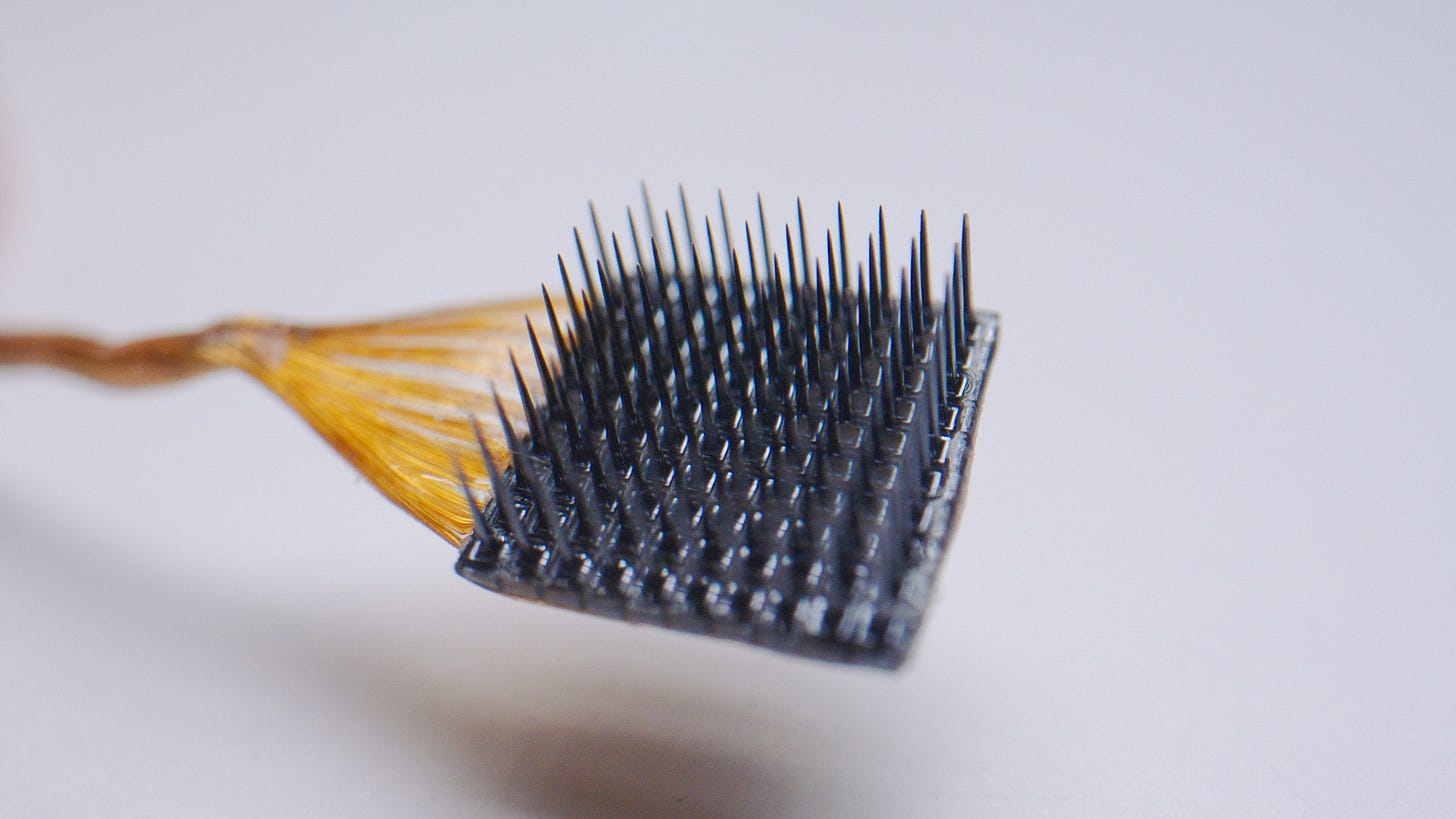

In Utah, bioengineers were developing a microelectrode array that would eventually be known as the Utah Array – a tiny bed of silicon needles that could be implanted in living neural tissue to record the firing of dozens of neurons simultaneously. In 1992, it was merely a clever piece of engineering. Few could have imagined its descendants would someday allow humans to control prosthetic limbs with their thoughts or enable machines to listen directly to living brains.

In Geneva, CERN was moving toward placing the technology behind something called the World Wide Web in the public domain, freely available for anyone to use, a step it would formally take in the spring of 1993. The same year Wolfenstein's virtual corridors were being navigated by gamers, Tim Berners-Lee was building corridors of hyperlinks that would eventually connect billions of humans and machines in a global network of information exchange.

In Maastricht, European leaders signed a treaty establishing the European Union, while in Moscow and Washington, Bush and Yeltsin formally declared an end to the Cold War. The systems of geopolitical conflict that had originally funded computing and AI research were transforming just as dramatically as the AI approaches themselves.

In Los Angeles, riots erupted after the acquittal of police officers in the beating of Rodney King.[9] In Bosnia, war was breaking out following the republic's declaration of independence from Yugoslavia.

And in South Africa, white voters approved dismantling apartheid, setting the stage for the nation's first multiracial elections.

While these human dramas played out on the world stage, in scientific labs, algorithms were being born that would eventually power search engines, logistics systems, and game AI. Computer scientists weren't just theorizing anymore – they were building tools that worked, even if they couldn't fully explain why.

As 1992 drew to a close, Allen Newell's colleagues prepared a special issue of AI Magazine to honor his memory. But perhaps the most fitting monument to Newell's legacy wasn't found in eulogies or academic tributes. It was evident in the way AI was evolving – becoming more practical, more diverse in its approaches, more willing to embrace methods that worked rather than those that merely seemed elegant.

And in a small lab in Utah, those tiny silicon needles less than 1.5 millimeters long were being arranged in perfect arrays, each capable of recording the whispers of neurons. The Utah Array couldn't think. It couldn't reason. It couldn't speak. But it could listen to the very cells that made thought possible – creating a bridge between carbon and silicon that neither Turing nor Newell could have fully imagined. The needle tips were precisely sharpened to penetrate neural tissue with minimal damage, recording the electrical signals of individual neurons firing.

In 1992, it was just another scientific tool. But in those tiny silicon spikes lay the seeds of futures where the boundaries between minds and machines, between intelligence natural and artificial, would blur in ways that even the pioneers of AI hadn't dared to dream.[10]

Two From Today

Both articles below explore the unintended consequences of technology in education: one through the lens of AI's impact on individual motivation and character, the other through the pandemic-era rush to digital classrooms. Taken together, they prompt us to reconsider not only how we deploy technology in schools but why, and they invite a deeper reflection on what education truly means in an increasingly digital world. The first examines our personal agency in the AI era; the second investigates the educational impacts of our growing dependence on screens.

First: “AI might not make us smarter, but it reveals who we already are.” An essay reflecting on Professor Lakshya Jain’s observations at Berkeley, where the arrival of ChatGPT coincided with declining student performance. Are AI tools turning students into lazy learners, or are they simply amplifying existing attitudes toward education? Perhaps technology is neither savior nor destroyer, but a mirror of personal character, revealing our strengths and our weaknesses.

Second: “Was the pandemic-era shift to digital learning an educational disaster?”

UNESCO’s Mark West argues that the widespread push toward screen-based learning during COVID-19 caused measurable harm to students worldwide, leading not only to lower test scores and growing inequalities but also increased dropout rates, digital addiction, and unprecedented levels of student distraction. His extensive research reveals how an over-reliance on ed-tech inadvertently accelerated privatization of education, enabled invasive surveillance, and profoundly undermined holistic student well-being, prompting an urgent reconsideration of technology’s role in schools.

[1] Can Machines Think? Humans Match Wits, The New York Times, November 1991

[4] A New Method for Solving Hard Satisfiability Problems, Selman et al.

[5] On the greedy algorithm for satisfiability, Koutsoupias and Papadimitriou

[7] A training algorithm for optimal margin classifiers, Boser, Guyon, and Vapnik

[8] How George Soros forced the UK to devalue the pound, Planet Money, NPR