The year was 1987, and on an October morning in Lower Manhattan, two men watched their computer screens as exponential curves predicted the end of an era.

"We were tracking exponential moves in the equity market," Peter Borish would later recall. "The main one was the equity move in the 1920s, and the market in 1987 looked almost identical." Borish and his boss Paul Tudor Jones had spent weeks comparing charts, overlaying 1987's market patterns with those that preceded the 1929 crash. The resemblance was uncanny, and terrifying.1

But while Tudor's team was reading patterns in market data, blocks away at Digital Equipment Corporation’s (DEC) New York offices—one of many offices across the country for what was then the world’s second-largest computer firm behind IBM—other exponential curves were signaling a different kind of collapse. Renowned for its successful line of minicomputers that fueled rapid growth throughout the 1970s and 1980s, DEC had leaned heavily on its flagship expert system, XCON, once heralded as a triumph of rule-based artificial intelligence. Yet XCON was now drowning in its own complexity: what had started as 750 rules had metastasized to over 6,200. Each new computer configuration demanded more rules, more exceptions, and more specialized handlers to keep the system afloat. Like the formula-based portfolio insurance strategies widely blamed for exacerbating the 1987 market plunge, XCON was discovering the limits of relying on rigid rules to replicate human expertise.

At Tudor's offices, Borish and Jones prepared for chaos. The pattern matching that guided their trading was fluid, adaptive — less like XCON's brittle rules and more like the neural networks that a small but growing group of AI researchers were beginning to explore in more depth. "That Monday morning was probably the greatest demonstration of trading skill that I have ever seen in my life," Borish would later say. "Even though we had this model saying Monday was the day, Paul was willing to add to the position."

As dawn broke over Wall Street, few understood that they were witnessing what would become the starkest example of rule-based systems failing in a complex world. But the stock market wasn't alone in facing this reality check in 1987. Throughout the year, the artificial intelligence community had been grappling with its own systemic challenges. Symbolics and other Lisp machine manufacturers had begun their decline months earlier. Their specialized workstations, built for the rule-based AI systems of the early 1980s, were being displaced by cheaper, more flexible UNIX machines. DARPA's funding priorities were shifting as well. The expert systems that had promised to revolutionize business were revealing their limitations, not because of the crash, but because of their own inherent brittleness when faced with real-world complexity.

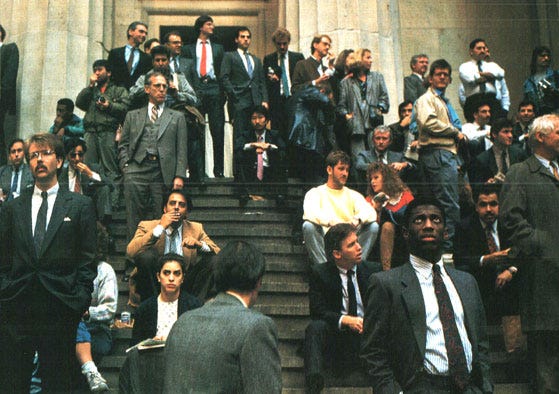

By the close of that October day, as the Dow plunged 508 points, the market's automated trading systems would dramatically demonstrate the perils of rigid rule-following that AI researchers had been quietly confronting all year. While regulators on Wall Street responded with “circuit breakers” (automatic trading halts triggered by steep market swings on exchanges like the New York Stock Exchange) to contain panic selling, the AI field was already undergoing a deeper shift: moving away from rigid, rule-based expert systems and embracing connectionist methods that could learn and adapt.

Down on the 40th floor of One New York Plaza, Michael Lewis wandered through what felt like a time capsule. The equity trading floor was everything the "cathedral of bonds" upstairs was not: cramped, dark, chaotic. "A lot of guys who were named Vinny and Tommy and Donny," as Lewis would later recall. "They'd been around forever, and they had Brylcreem in their hair and big guts and they smoked too much and they were lovable."

These traders, with their gut instincts and human messiness, were about to collide with the cold logic of portfolio insurance: computer programs designed to automatically hedge stock positions by selling futures contracts. Like XCON's rules for configuring computers or expert systems' attempts to capture human knowledge, portfolio insurance represented a faith that complex human decisions could be reduced to mathematical formulas.

"There was red everywhere," Paul Tudor Jones would remember, "and all I could think about was how cornered the portfolio insurers were." The programs, following their predefined rules, began selling futures to hedge against losses. But as prices fell, they triggered more selling, creating a feedback loop that human traders might have recognized as dangerous but the machines faithfully executed.

At Salomon Brothers, Lewis found himself unexpectedly fascinated by the scene unfolding on the equity floor. "It was the first time in my career at Salomon Brothers where I was actually interested in standing beside the equity department and watching these people do their job." He was witnessing something profound: the moment when theory met reality, when elegant mathematical models crashed against the messy complexity of human panic.

As traders on the equity floor grappled with the market’s unraveling, another version of Wall Street was about to hit theaters. Oliver Stone’s Wall Street premiered that December, its slick depiction of high-flying finance arriving just as the real-world consequences of financial excess had become all too clear. Gordon Gekko’s now-iconic declaration, “Greed is good,” would come to symbolize an era that had, by Black Monday, already begun to devour itself.

The contrast between the two floors at Salomon Brothers — the pristine logic of the bond trading cathedral and the raw humanity of the equity floor — echoed a deeper divide playing out across both Wall Street and the growing world of computational intelligence. Upstairs sat the true believers in mathematical models and rational systems. Downstairs were the “Vinnys and Tommys,” the human traders whose intuition and adaptability would prove crucial as rigid systems began to fail.

The lesson wasn't lost on XCON's creators at DEC, who had spent years adding rule after rule to handle edge cases their initial models hadn't contemplated. Like the portfolio insurance programs spiraling into a feedback loop of selling, XCON had reached a point where its very complexity had become its greatest weakness. The dream of encoding human expertise into rules was revealing its limitations on multiple fronts.

As computer screens across Wall Street flickered with falling numbers, half a world away in Beijing, other numbers were rising. China's economic reforms under Deng Xiaoping had begun showing remarkable results — GDP growth, foreign investment, industrial output. But like the expert systems struggling with real-world complexity in Western labs, China's leaders were discovering that pure rules, whether capitalist or communist, rarely survived contact with human reality.

In those accelerating markets of late 1987, multiple forms of rigidity were being tested simultaneously. On Wall Street, portfolio insurance programs mindlessly executed their sell orders, creating what Paul Tudor Jones called "the Acapulco cliff dive." In Beijing, party officials mechanically applied ideology to a rapidly evolving economy, even as students and reformers pushed for greater flexibility.

"The friends and counterparties I was speaking with were gripped with complete fear," Jones would later recall. Fear was indeed the one emotion that rule-based systems, whether financial algorithms or expert systems, couldn't properly process. By mid-morning, as the Dow plunged and phones went unanswered across trading floors, that human element was asserting itself forcefully.

The day would end with the Dow down 508 points, the largest one-day percentage drop in history. But the real lesson wasn't in the numbers. Just months earlier, Ronald Reagan had stood at the Brandenburg Gate in Berlin, challenging Gorbachev to "tear down this wall." Like the market crash and the decline of rule-based AI and Lisp machines, Reagan's speech suggested a fundamental truth: rigid systems, whether walls or algorithms, eventually face moments when they must either adapt or collapse.

Whether in finance, artificial intelligence, or governance, systems built on inflexible rules inevitably face moments when those rules no longer suffice. By year’s end, those adaptations were already beginning: circuit breakers for the markets, neural networks for AI, and across the world, the first cracks in the walls that divided East from West. The true test wasn’t in preventing such moments, but in how systems and their human overseers adapt when they arrive.

Two From Today

First: a growing body of research suggests that heavy reliance on AI weakens critical thinking, a problem not unlike the automation-induced skill decay seen in pilots and traders decades ago. A new study from Microsoft found that knowledge workers using AI engage less deeply with their work, outsourcing cognitive effort in ways that could lead to long-term intellectual atrophy.

But it’s not all bad news. The research also hints at a way to use AI without sacrificing brainpower—leveraging it to “chunk” information at higher levels rather than offloading thinking altogether.

If you’re worried about what AI is doing to your ability to think—or to the next generation’s ability to think at all—this is worth a read.

But don’t worry about AI making us dumber: apparently Meta is already working on skipping the thinking part altogether.

“Meta has unveiled a groundbreaking AI system capable of converting thoughts into typed text with up to 80% accuracy, but the technology currently requires a massive, non-portable brain scanner and controlled laboratory conditions to function effectively.” From Meta’s own blog:

Using AI to decode language from the brain and advance our understanding of human communication

Links to the published papers and more details are at the bottom of the post.

And here’s more reporting on it from MIT Technology Review:

Meta has an AI for brain typing, but it’s stuck in the lab

“Now the company, since renamed Meta, has actually done it. Except it weighs a half a ton, costs $2 million, and won’t ever leave the lab.

Still, it’s pretty cool that neuroscience and AI researchers working for Meta have managed to analyze people’s brains as they type and determine what keys they are pressing, just from their thoughts.”

Is it?

The Crash of ’87, From the Wall Street Players Who Lived It - Bloomberg (gift)