This week’s essay was delayed from this past Sunday to today, Thursday. Next week’s essay on the year 1987 will still be published this Sunday evening.

This week’s essay also happens to mark the halfway point in our journey, A Short Distance Ahead. It is a bit uncanny that this year, 1986, crosses a research threshold that would come to define the modern forms of machine learning and artificial intelligence we now interact with most. That threshold is best captured in the work Geoffrey Hinton published over this two-to-three-year period.

The year was 1986, and America was trying to solve its hunger crisis by holding hands.1 More than 35 million Americans were living below the poverty line, including nearly 13 million children — roughly one in five — who regularly went to bed hungry.

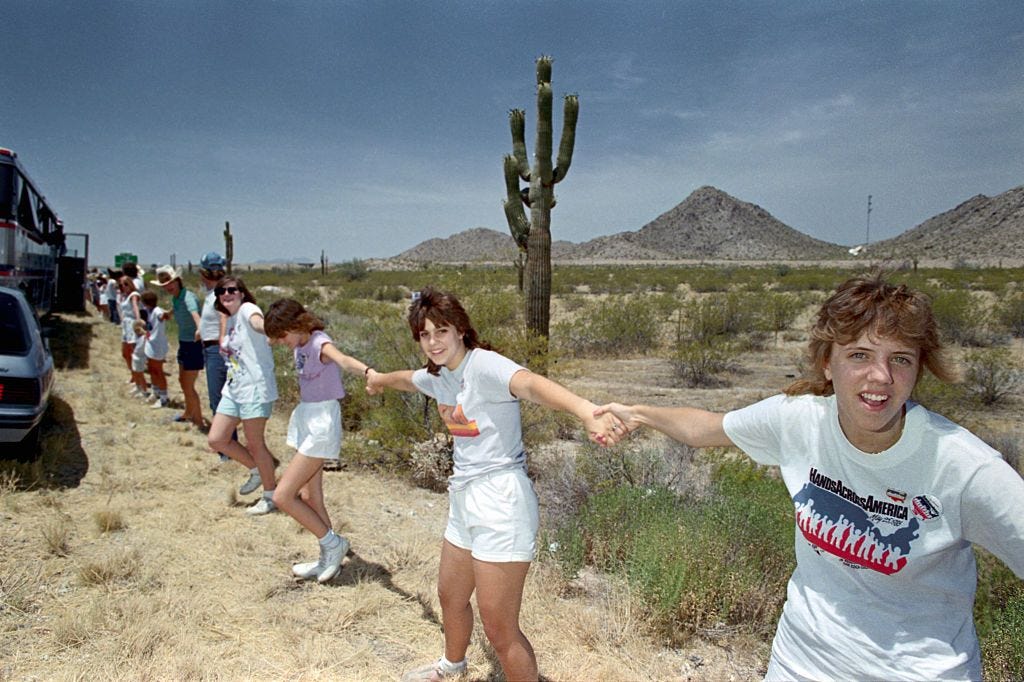

On Memorial Day weekend, some 6.5 million people set out to form an unbroken human chain across the continent, called Hands Across America. The goal was simple yet ambitious: if enough people could physically connect, perhaps they could bridge the growing chasm between abundance and need in America. Getting that many people to join hands along an attempted 4,152-mile route was a triumph of social coordination. But the effort ultimately raised just $15 million after expenses, less than a dollar per hungry American, a pittance compared to the scale of the problem it meant to address.

Meanwhile, working across computer science labs at Carnegie Mellon and UCSD, three researchers were about to publish a paper, Learning Representations by Back-propagating Errors, that would forever transform how machines learn. Geoffrey Hinton, David Rumelhart, and Ronald Williams had discovered a way for artificial neural networks to learn from their mistakes. Their method, called backpropagation, allowed networks of simple artificial neurons to gradually adjust their connections through trial and error. Like a student learning from mistakes, the network could check its answers against correct ones and gradually adjust itself to make fewer errors. The mathematics was elegant; the implications were profound.2

In Beijing, Deng Xiaoping was reading a different kind of paper — an urgent letter from four of China's most prominent scientists. They warned that China was falling dangerously behind in high technology, particularly in response to America's "Star Wars" Strategic Defense Initiative. Deng's response was swift and decisive. He would approve what would become known as the 863 Program — a radical experiment that put scientists, not bureaucrats, in charge of China's technological development.

Three attempts to solve complex problems through new kinds of learning — one through the literal joining of hands across a continent, another through the mathematics of artificial neurons adjusting their connections, and a third through reimagining how a nation's bureaucracy might learn to innovate. Each would face its own form of resistance.

The gaps in the human chain across America proved difficult to ignore. Despite meticulous planning, the line dissolved into disconnected segments across the desert Southwest. Some participants in sparsely populated areas stood miles apart, holding their position for the designated fifteen minutes, connected only in theory. Supposedly, the singer Robert Goulet “was helicoptered to sparsely populated Vicksburg, Arizona, with the resident of a homeless shelter (named Barbara Larsen), to bury a time capsule commemorating the event.”3 The organizers declared victory nonetheless — the spectacle itself deemed more important than the actual connection.

In computer science labs, Hinton's backpropagation was creating its own kind of stir. The idea that machines could learn through trial and error, much like humans, challenged the dominant paradigm of artificial intelligence. Most AI researchers were still focused on programming explicit rules and logic — if-then statements that attempted to codify human knowledge. Backpropagation suggested a different path: let the machines discover their own rules through experience. But the computers of 1986 struggled to handle even simple neural networks. The mathematics worked on paper, but the hardware wasn't ready.

Meanwhile, the 863 Program was racing against its own constraints. Chinese scientists, finally free from bureaucratic oversight, outlined ambitious goals in seven key areas: biotechnology, space, information, lasers, automation, energy, and new materials. They created direct funding channels that bypassed traditional hierarchies. Merit, not political connection, would determine which projects received support. But already, by year's end, the first signs of bureaucratic resistance were emerging. Old habits of control died hard.

Each of these milestones revealed something about the gap between ambition and reality. The funds raised by Hands Across America amounted to symbolic relief rather than structural change. Hinton's networks, though mathematically sound, remained largely theoretical as researchers waited for computers powerful enough to implement them. And in China, the 863 Program's attempt to separate scientific merit from political control was proving more complex than its architects had imagined.

Yet in the seeming limitations of each effort lay the seeds of something more profound. Perhaps some problems required more than simply joining hands, or discovering clever algorithms, or rearranging bureaucratic hierarchies. Perhaps real learning — whether by machines, institutions, or societies — was messier than anyone was capable of imagining in 1986.

The winter of that year arrived with a strange kind of promise. In offices across America, publicity photos from Hands Across America were being filed away in drawers, the aerial shots looking like neural networks themselves — lines of human connection stretched across the continent, some strong, some weak, some broken entirely. At Carnegie Mellon, Hinton and his colleagues were beginning to receive the first responses to their paper, many skeptical of whether machines could really learn this way. And in Beijing, as the first project proposals for the 863 Program crossed newly created desks, scientists and bureaucrats were learning to speak each other's languages.

In many ways, the year 1986 was like a neural network in its early training epochs: each of these milestones moved blindly toward an unknown optimum, some paths strengthening while others withered away. And the arrival of another AI Winter was just A Short Distance Ahead.

Two From Today

This week’s newsletter is coming out today, rather than last Sunday night, because there were some more exciting things happening here in Philadelphia. But as far as the much-hyped AI Super Bowl commercials went, apparently:

Super Bowl Ad Results: AI Can’t Compete With Sentimental Stories (gift)

From The Wall Street Journal: “A theme going into the night was what role, if any, would AI play. And I think we saw that, you know, at least this year, AI pretty much fell flat,” said USA Today video journalist Ralphie Aversa.

“In many cases it suggested that audiences responded better to sentimentality and celebrity than the promise of technology.”

OpenAI’s Super Bowl debut, for example, positioned the rise of ChatGPT as a key moment in human history, but critics and viewers seemed ready to forget the spot.

And, on deepfakes:

When an AI-generated deepfake of a high school principal went viral, it wasn’t just deception that fueled the outrage; it was the fact that people wanted to believe it. This piece explores why deepfakes are more than just tools of misinformation; they are potent expressions of our biases and desires.

"The power of deepfakes lies not in what they hide but in what they reveal."

“Deepfakes are thought of as a powerful means of deception but that’s the wrong framing. They don’t change minds, they confirm what people already believe. Their real power doesn't lie in how well they can conceal the truth, but in how well they can express a counter-truth.”

“From this perspective, a deepfake is more like art than crime. More like satire than propaganda. It distorts reality to reinforce a narrative.”

"Fake or not, we don’t care. We can't care. The epistemic apocalypse is here, but it’s always been."

“In a way, deepfakes are the greatest threat not against the easily gullible but the terminally stubborn. The danger isn’t deception or concealment; it’s mass reinforcement of our worst biases. The longer we fail to recognize and accept this, the more destructive they will become.”

Read the full piece here:

Reagan Decides to Join Hands Across America - NYTimes ’86 (gift)