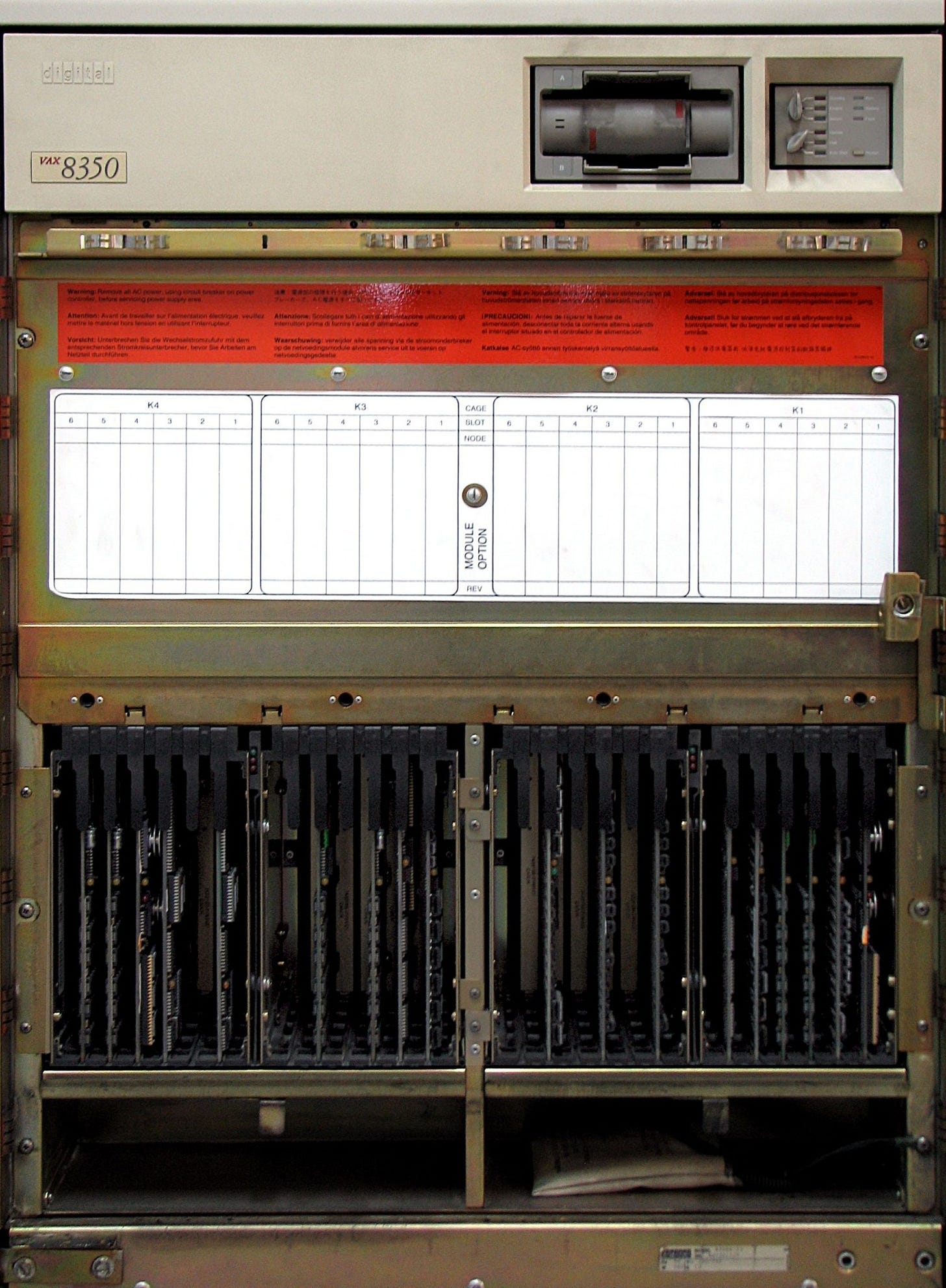

The year was 1985, and artificial intelligence stood at a crossroads between its corporate dreams and academic reality. At Digital Equipment Corporation (DEC), engineers were wrestling with a deceptively simple problem: how to configure computer systems without human experts.

For a company that had helped birth the field of artificial intelligence by supplying machines to academic labs throughout the 1970s,[1] their solution proved surprisingly old-school. XCON (or R1), an expert system built on explicit rules and logic, had grown from 750 rules to over 2,500, becoming what DEC engineer Stephen Polit called "an integral part of the manufacturing process."

The system was a triumph, yes, but perhaps not the kind of triumph they had imagined when they first began supplying machines to AI researchers at MIT, Stanford, and Carnegie Mellon. XCON could configure computer systems, saving DEC millions, but it required constant maintenance, with teams of specially trained personnel updating its ever-growing rulebook to handle new products and combinations. It was less an artificial intelligence than an artificial expert — brilliant within its domain but unable to learn or adapt on its own.
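To make the maintenance burden concrete, here is a minimal sketch of a rule-based configurator in Python. It is not XCON's actual rule base or language: the part names, the three rules, and the `configure` function are all hypothetical, invented purely for illustration. It only shows the general shape of the approach, in which every piece of expertise is a hand-written condition paired with an action.

```python
# Hypothetical parts and rules, invented for illustration only.
# These are NOT XCON's real rules; they just show the rule-based style.
RULES = [
    (
        "disk drive needs a controller",
        lambda parts: "disk_drive" in parts and "disk_controller" not in parts,
        lambda parts: parts.add("disk_controller"),
    ),
    (
        "controller needs an expansion cabinet",
        lambda parts: "disk_controller" in parts and "expansion_cabinet" not in parts,
        lambda parts: parts.add("expansion_cabinet"),
    ),
    (
        "expansion cabinet needs extra power",
        lambda parts: "expansion_cabinet" in parts and "extra_power_supply" not in parts,
        lambda parts: parts.add("extra_power_supply"),
    ),
]


def configure(order):
    """Apply the rules repeatedly until none of them fires any more."""
    parts = set(order)
    fired = []
    changed = True
    while changed:
        changed = False
        for name, condition, action in RULES:
            if condition(parts):
                action(parts)
                fired.append(name)
                changed = True
    return parts, fired


parts, fired = configure({"cpu", "disk_drive"})
print(sorted(parts))
print(fired)
```

Nothing here learns. If a new product appears, someone has to write a new rule by hand and check that it does not clash with the existing ones, which is the kind of upkeep the paragraph above describes.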

This vision of AI, based on explicitly encoded knowledge and rules, wasn't just DEC's corporate bet. That same year, in his presidential address to the American Association for Artificial Intelligence, Woody Bledsoe[2] looked back on 25 years of progress and declared definitively: "Knowledge is king; knowledge—the key to who we are." While acknowledging that the field's grand ambitions had faced setbacks, Bledsoe saw the future in what he called "Knowledge-application machines." Expert systems like XCON seemed to validate this vision.

But even as Bledsoe delivered his address, change was brewing in academic labs across the world. About half of the new crop of graduate students admitted to the Ph.D. program in computer science at the University of Texas had selected AI as their preferred field of study. What Bledsoe couldn't have known was that this new generation would help upend the very paradigm he was celebrating.

In Pittsburgh, Geoffrey Hinton and his colleagues, David Ackley and Terrence Sejnowski, were preparing to publish a paper on "Boltzmann Machines" that would help rekindle interest in neural networks. Boltzmann machines, in this context, were a type of neural network, inspired by how objects in nature find their most stable state — like a marble settling at the bottom of a bowl. Rather than following explicit rules, these neural networks could discover optimal patterns through a process of gradual refinement — learning from experience rather than programmed instructions.
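The marble-in-a-bowl picture can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the algorithm from the Hinton, Ackley and Sejnowski paper: the four-unit network, its weights and biases, and the temperature schedule are all hypothetical, and the sketch covers only the "settling" step (noisy updates that gradually cool), not the learning procedure that adjusts the weights.

```python
import math
import random

# Hypothetical toy network: symmetric weights, no self-connections.
# Units 0 and 1 support each other, as do units 2 and 3; the two pairs compete.
WEIGHTS = [
    [0.0, 2.0, -1.0, 0.0],
    [2.0, 0.0, 0.0, -1.0],
    [-1.0, 0.0, 0.0, 2.0],
    [0.0, -1.0, 2.0, 0.0],
]
BIASES = [-0.5, -0.5, -0.5, -0.5]


def energy(state):
    """Global energy of a 0/1 state; lower means more 'settled'."""
    n = len(state)
    pair_term = sum(
        WEIGHTS[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(i + 1, n)
    )
    bias_term = sum(BIASES[i] * state[i] for i in range(n))
    return -pair_term - bias_term


def energy_gap(state, i):
    """Energy saved by having unit i on rather than off."""
    others = sum(WEIGHTS[i][j] * state[j] for j in range(len(state)) if j != i)
    return others + BIASES[i]


def settle(state, temperatures, rng):
    """Noisy updates at falling temperatures, then noise-free updates to rest."""
    state = list(state)
    # Noisy phase: high temperature lets the "marble" jump out of shallow dips.
    for t in temperatures:
        for i in range(len(state)):
            p_on = 1.0 / (1.0 + math.exp(-energy_gap(state, i) / t))
            state[i] = 1 if rng.random() < p_on else 0
    # Zero-temperature phase: each unit takes the lower-energy value
    # until no single unit wants to change.
    changed = True
    while changed:
        changed = False
        for i in range(len(state)):
            gap = energy_gap(state, i)
            new_value = 1 if gap > 0 else 0 if gap < 0 else state[i]
            if new_value != state[i]:
                state[i] = new_value
                changed = True
    return state


final = settle([0, 1, 0, 1], [4.0, 2.0, 1.0, 0.5, 0.25], random.Random(0))
print(final, energy(final))
```

Each unit looks only at its neighbours, yet the network as a whole ends in a state where no single flip lowers the energy. Which resting state it reaches depends on the random draws, so the sketch makes no claim about the specific output.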

The tension between these approaches — symbolic reasoning versus statistical learning — mirrored larger shifts happening across society. Just as corporations like DEC were discovering the limits of systems based on rigid rules, researchers were exploring a different idea: what if intelligence came from simple parts working together, the way neurons connect in our brains?

The irony was rich: even as DEC and other corporations rushed to embrace expert systems, academia was rediscovering approaches that would eventually make them obsolete. That March, at the Cognitiva conference in Paris, when a young French researcher named Yann LeCun met Hinton over a plate of couscous, few could have predicted their shared vision of neural networks would eventually transform AI. LeCun had written what he self-deprecatingly called "a badly written paper in French," but Hinton saw something in it — and in the young researcher — that resonated with his own quest to make machines learn more like humans.

The Boltzmann Machine that Hinton and his colleagues had developed represented a fundamental shift in thinking about artificial intelligence. Instead of encoding human expertise into explicit rules — like XCON's thousands of configuration instructions — these systems could discover their own internal representations through a process akin to learning. The mathematics behind their work offered proof that intelligence might emerge from simple units working together, rather than from top-down programming.

But this vision faced significant skepticism. The AI winter of the 1970s had left deep scars, particularly around neural networks. Marvin Minsky and Seymour Papert's 1969 book "Perceptrons" had seemingly proven fundamental limitations in what neural networks could accomplish. Even Hinton's own doctoral advisor had urged him not to pursue neural network research. "It's like religion," the advisor warned. "Either you believe in it or you don't."

While DEC was training engineers to maintain their expert systems — teaching them to think like machines — Hinton, LeCun, and others were teaching machines to think more like humans. And as these two visions of artificial intelligence competed in labs and boardrooms, the wider world was grappling with its own questions about complexity and emergence.

That July, Live Aid connected an estimated two billion people across the globe in what seemed like a triumph of technological networking and human compassion. Yet even this moment contained its own contradictions. While the event revolutionized charitable fundraising through mass media and demonstrated technology's power to unite humanity,[3] it also perpetuated simplistic narratives about complex problems.[4] Much like the AI researchers discovering that intelligence couldn't be reduced to simple rules, the world was learning that global challenges resisted easy solutions.

In the Soviet Union, Mikhail Gorbachev was introducing concepts of "glasnost" (openness) and "perestroika" (restructuring), suggesting that even the most rule-bound systems might need to learn and adapt.[5] Like AI researchers questioning centralized rule systems, the Soviet Union under Gorbachev was discovering the limitations of top-down control.

Hinton's paper described how networks of simple units, following local rules of interaction, could give rise to global intelligence — much like neurons in the brain, or like the emerging personal computer revolution that was decentralizing computing power. That same year, the first .com domain name was registered, and Microsoft released the first version of Windows — signs that computing itself was becoming less centralized, more networked, more adaptive.

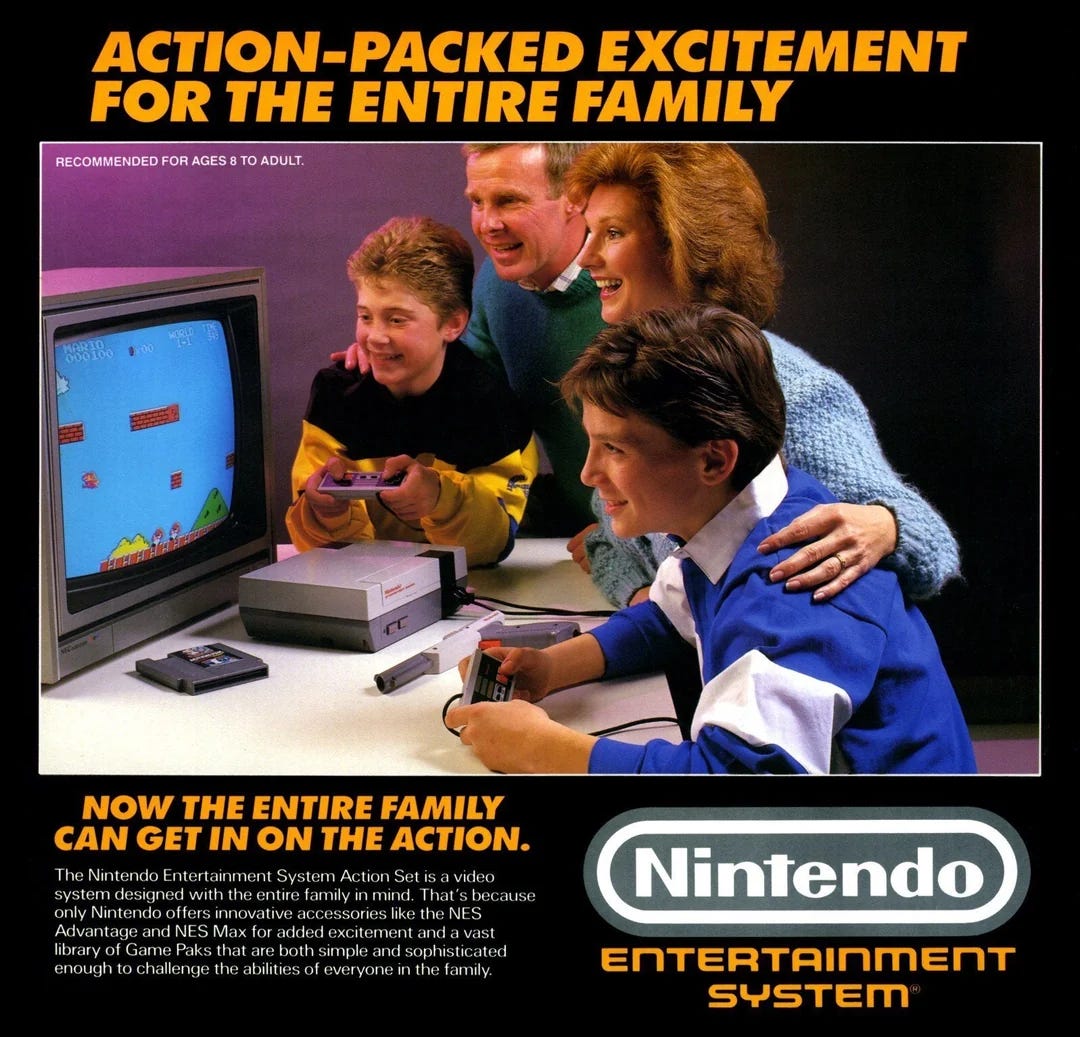

By year's end, as Nintendo launched its Nintendo Entertainment System in America and the first version of the programming language C++ was released, the stage was set for a fundamental transformation in how we thought about both intelligence and computation. The future would belong not to rigid rules but to learning systems, not to centralized control but to emergent behavior.

By the end of 1985, the future of artificial intelligence remained uncertain. DEC's XCON kept expanding its rule base, configuring computers of increasing complexity, while neural networks in academic labs hinted at a paradigm shift yet to come.

What no one fully grasped in 1985 was that both approaches embodied essential truths about intelligence. Symbolic systems, like XCON, proved that expertise could be codified and applied with precision. Meanwhile, neural networks, like Hinton's Boltzmann Machines, suggested that intelligence might emerge not from explicit rules, but from simpler principles of learning and adaptation.

1985 marked the moment AI began to reckon with its own complexity. Like the global connectivity underscored by Live Aid, the political shifts sparked by glasnost, and the personal computing revolution enabled by Windows 1.0, AI was discovering that the most profound transformations often arise not from rigid top-down control, but from the intricate interplay of simpler, independent elements.

Two From Today

The AI-related news this past week was dominated by DeepSeek, an open-source AI model from China that challenges the dominance of U.S. tech giants by offering a cheaper, more decentralized alternative to the massive, expensive AI models currently being built.

First, Sinofsky writes:

DeepSeek Was Inevitable—The Only Question Was Who Would Build It

The piece breaks down why DeepSeek, an open-source AI model from China, was bound to happen. The AI race in the U.S. has been dominated by hyperscalers (Google, Meta, OpenAI/Microsoft, etc.) following a "scale up" strategy of piling massive CapEx into ever-larger models. History suggests that disruptive technologies eventually "scale out" instead, leveraging smaller, more numerous, and cost-efficient alternatives (think: mainframes → PCs, Cray → Intel, etc.). Sinofsky points out how DeepSeek represents this shift. It runs on commodity hardware, operates without hyperscaler infrastructure, and challenges the assumption that only trillion-dollar companies can lead AI innovation. Whether U.S. companies recognize this shift in time remains to be seen.

The lesson according to Sinofsky? AI is on the brink of commoditization. The next game-changer will likely come from a company embracing "scale out," not just throwing more billions at "scale up."

And second, in The Guardian, Kenan Malik writes:

DeepSeek has ripped away AI’s veil of mystique. That’s the real reason the tech bros fear it

Malik argues that DeepSeek’s biggest impact isn’t technological but economic and political. It matches the capabilities of existing AI models like ChatGPT but was built at a fraction of the cost, defying the dominant U.S. “scale-up” approach of massive investment and proprietary control. Ironically, China’s open-source release challenges Silicon Valley’s AI monopoly, revealing that democratization—not just breakthroughs—can be disruptive. While concerns about censorship and surveillance are valid, the deeper fear in the West is that AI leadership might be slipping beyond U.S. control.

Finally, this week I watched a recent episode of PBS’s Frontline:

China, the U.S. & the Rise of Xi Jinping

As with every Frontline I watch, I came away with a deeper perspective on China and Xi Jinping, and additional context on the China-U.S. relationship. I highly recommend it.

The historical dissonance of Woody Bledsoe borrowing Martin Luther King Jr.'s 'I Have a Dream' framing for his 1985 AAAI address was real for those who knew his earlier work. In the 1960s, Bledsoe had helped pioneer facial recognition technology while working on classified projects for the CIA, and King was one of the most heavily surveilled Americans of his time. Now in 1985, just as the country was preparing to observe King's birthday as a federal holiday for the first time, Bledsoe was co-opting the civil rights leader's rhetorical framework to champion an AI future where 'Knowledge is king', though what kind of knowledge, and who would control it, remained unspoken questions. More on Bledsoe here: The Secret History of Facial Recognition - Wired