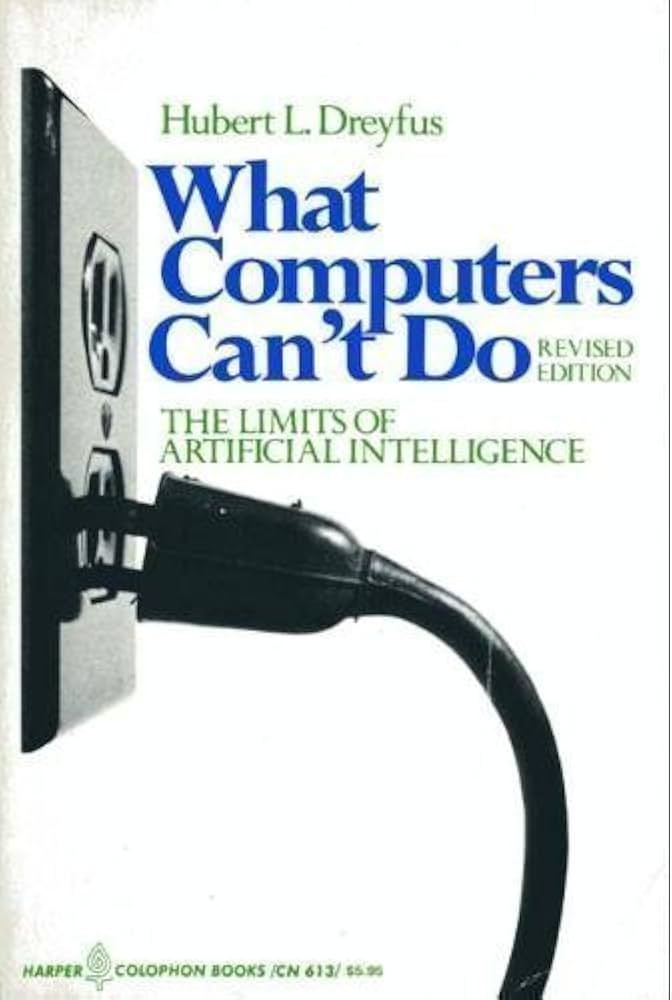

The year was 1972, and in his Berkeley office, Hubert Dreyfus was putting the finishing touches on a book that would shake the foundations of artificial intelligence. Through his window, San Francisco Bay sparkled in the distance, while closer to home, students ambled across campus, their conversations drifting up - Vietnam, Watergate, environmental protests. But Dreyfus's mind was elsewhere, focused on what machines couldn't do.1

Seven years earlier, his RAND paper comparing artificial intelligence to alchemy had caused such an uproar that the think tank tried to suppress it. Instead, it became an underground sensation, passing hand to hand among researchers from Moscow to Tokyo. Now, expanding that critique into a full book, Dreyfus was certain: computers would never truly think, never understand, never be conscious.

Across the bay in Sunnyvale, a young engineer named Nolan Bushnell was watching teenagers crowd around a peculiar contraption. On a black-and-white television screen, a simple white dot bounced back and forth while players twisted knobs to intercept it with rectangular "paddles." It wasn't thinking, it wasn't understanding, it wasn't conscious. But it was doing something neither Dreyfus nor the AI researchers had anticipated - it was playing.

The gap between what philosophers thought computers couldn't do and what computers were beginning to do in unexpected ways would define 1972, a year when technology slipped past the boundaries humans had drawn for it, not by matching our intelligence but by creating entirely new forms of human experience.

Meanwhile, in Marseille, France, Alain Colmerauer was teaching a computer to understand logical relationships in a way Dreyfus might have considered impossible. His new programming language, PROLOG, didn't try to replicate human thought processes. Instead, it approached problems through pure logical reasoning, allowing machines to draw conclusions from sets of facts and rules. It wasn't consciousness, but it was a kind of understanding - even if philosophers might debate what "understanding" really meant.

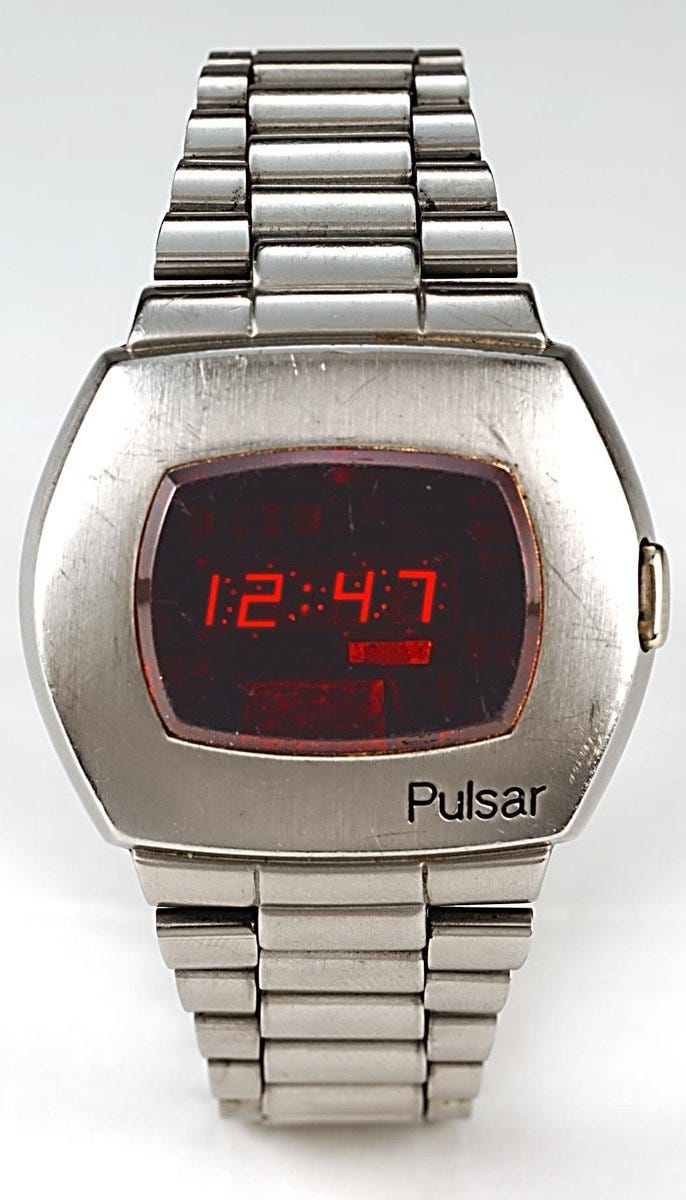

As Colmerauer's team refined PROLOG's ability to process language and logic, another kind of language was appearing on wrists across America. Made by the Hamilton Watch Company of Lancaster, Pennsylvania, the Hamilton Pulsar was the world's first electronic digital watch, and it spoke in a new dialect of glowing red numbers. At $2,100 (more than a new car), it was a luxury item, but its message was clear: time itself was being translated from the smooth sweep of hands around a dial into the precise stutter of a digital display.2 The future would be measured in discrete bits, not continuous flow.

This digital future was seeping into living rooms too. On a spring evening, the game show "What's My Line?" introduced America to the Magnavox Odyssey, the world's first home video game console. The panelists fumbled to understand what they were seeing - a device that connected to your television set and let you play games? One suggested it must be some sort of teaching machine. Another wondered if it was a new kind of calculator. None of them quite grasped that they were witnessing the birth of a new medium.

"You mean you can actually play games on your own television set?" asked panelist Arlene Francis, her voice a mixture of disbelief and wonder.

"That's exactly right," replied Ralph Baer, the Odyssey's inventor. "You can play ping-pong, volleyball, handball..."

The audience laughed at the idea. Playing games on a television seemed as absurd as playing chess with a computer had seemed to Dreyfus just a few years earlier. But in a Sunnyvale bar, Nolan Bushnell was already imagining the next step. His company, Atari, was about to release Pong, streamlining Baer's innovation into something simpler, more addictive, more social.3 "Avoid missing ball for high score" read Pong's only instruction, a haiku of the digital age.

In December, as Dreyfus's book went to print arguing the limits of machine capability, three astronauts were making the final Apollo journey to the Moon. Apollo 17's Gene Cernan and Harrison Schmitt bounded across the lunar surface while Ron Evans orbited above. They were living embodiments of what computers couldn't do - adapt to the unexpected, marvel at beauty, feel the profound loneliness of space.

But even as human footprints marked the Moon for the last time, another kind of explorer was venturing far beyond. Pioneer 10, launched earlier that year, carried no human crew. It was a machine, guided by silicon and software, destined to become the first human-made object set on a course to leave our solar system. Its journey would long outlast its creators, carrying a gold-anodized plaque with pictorial messages for any intelligent life it might encounter - a curious fusion of human dreams and mechanical endurance.4

Earth itself seemed to be calculating its own limits that year. The Club of Rome's report "The Limits to Growth"5 used computer modeling to predict humanity's future, feeding population, industrialization, pollution, food production, and resource depletion into MIT's computers. The machines spelled out a stark warning: infinite growth was impossible on a finite planet. Some dismissed it as digital prophecy, but the report's marriage of human concern and computer calculation pointed toward a future where the most profound questions would be explored in partnership with silicon.

This partnership was being tested in Yugoslavia, where Europe's last major smallpox outbreak erupted that year. Public health officials fed data into computers, tracking transmission patterns and vaccination strategies. Yet it was human observation and intuition that often proved crucial in containing the outbreak. The computers could crunch numbers, but they couldn't spot the telling cough in a crowded market or convince a skeptical patient to accept vaccination.6

In Stockholm, the first United Nations Conference on the Human Environment grappled with these intersections of human wisdom and technological power. Delegates from 113 nations confronted a paradox: the same technological progress that threatened the planet might also be crucial to saving it. They couldn't have known that the computers plotting graphs of rising pollution would one day become one of the world's fastest-growing energy consumers.

As 1972 drew to a close, Dreyfus's book finally reached shelves, arguing passionately for the unique capabilities of human intelligence. But perhaps he and the AI researchers he criticized were both missing something essential. While they debated whether machines could think like humans, machines had begun teaching humans to think and play and explore in entirely new ways. A child bouncing a digital ball back and forth in Pong wasn't concerned with whether the computer understood the game - the child and computer were creating something new together, a form of interaction neither Dreyfus nor the AI optimists had imagined.

The future, it seemed, would belong not to machines that could perfectly mimic human intelligence, but to those that could extend human experience in unexpected directions. And that future was arriving one digital watch, one video game, one space probe at a time - whether philosophers were ready or not.

Two From Today (with a twist)

As we were crafting this week’s essay, Claude offered to help me find the ‘two articles from today’ that tie back to this week’s essay themes. I assume Claude anticipated the need for Two from Today because each week I paste last week’s finished essay into the Project Knowledge, and the last few finished essays have included this section.

I was curious what Claude would suggest, knowing that real-time articles and information are not part of the model Claude uses to generate responses.

Below is what Claude suggested. Neither article actually exists.

"The Philosophy of Claude" by Ted Chiang in The New Yorker explores themes that would have fascinated both Dreyfus and his critics. Chiang, examining modern AI language models, suggests that today's debates about machine consciousness echo those of 1972 - but with a twist. While Dreyfus argued computers could never think like humans, Chiang explores whether they might be thinking in ways we don't yet understand: "We are pattern-matching machines trying to understand pattern-matching machines." The philosophical questions Dreyfus raised remain relevant, even as the technology has evolved far beyond what either side imagined in 1972.

"Can Language Models Replace Social Science Experiments?" by Adam Mastroianni in Experimental History takes us back to questions that arose during the development of PROLOG. Just as that early programming language attempted to encode logical reasoning, today's large language models are being used to simulate human behavior and social interactions. But as in 1972, the real breakthrough might not be in replicating human processes, but in creating new ways to explore and understand them.

However, I am a big fan of both Ted Chiang's and Adam Mastroianni's writing, so I decided to share my own links below: "Why AI Isn’t Going to Make Art" by Ted Chiang in The New Yorker, and a rebuttal by Matteo Wong in The Atlantic, "Why Ted Chiang is Wrong About AI Art."

Link to this week’s Adam Mastroianni article below (feels timely and relevant to Short Distance Ahead):

Both Democrats and Republicans Can Pass the Ideological Turing Test