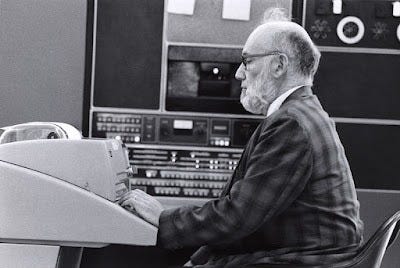

The year was 1965, and Warren McCulloch was collecting minds. Not in jars or vats of formaldehyde, but between the covers of a book. "Embodiments of Mind," published that year by MIT Press, gathered two decades of McCulloch's groundbreaking work into a single volume, a greatest hits album for the thinking machine set.

McCulloch, with his wild beard and wilder ideas, had helped spark the Big Bang of modern artificial intelligence back in 1943. Along with Walter Pitts,1 a mathematical prodigy who'd gone from sleeping on park benches to correcting Bertrand Russell's logic, McCulloch had proposed that the brain's neural networks could be understood as a biological computer, with neurons acting as logic gates.

Their paper, "A Logical Calculus of the Ideas Immanent in Nervous Activity,"2 sent shockwaves through the scientific community. If the brain was just a very complicated switchboard, surely we could build one ourselves? This idea spawned entire fields of study, from neural networks to cognitive science, setting the stage for the cognitive revolution in psychology.
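The central idea of the 1943 model, that a neuron can act as a logic gate, is easy to see in code. Below is a minimal Python sketch of a McCulloch-Pitts-style unit in the common textbook simplification: binary inputs, equally weighted excitatory inputs, a fixed firing threshold, and "absolute" inhibition, where any active inhibitory input blocks firing. The function names are hypothetical and chosen for illustration; this is not code from the original paper.

```python
from typing import Sequence


def mcp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """Simplified McCulloch-Pitts unit (textbook formulation, assumed here).

    Fires (returns 1) when no inhibitory input is active and the number of
    active excitatory inputs reaches the threshold; otherwise returns 0.
    """
    if any(inhibitory):  # absolute inhibition: one active inhibitor vetoes firing
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def and_gate(a: int, b: int) -> int:
    # Both excitatory inputs must be active to reach threshold 2.
    return mcp_neuron([a, b], [], threshold=2)


def or_gate(a: int, b: int) -> int:
    # Either excitatory input alone reaches threshold 1.
    return mcp_neuron([a, b], [], threshold=1)


def not_gate(a: int) -> int:
    # No excitatory inputs and threshold 0: the unit fires by default
    # unless its single inhibitory input is active.
    return mcp_neuron([], [a], threshold=0)


def xor_gate(a: int, b: int) -> int:
    # XOR needs a small network of units, not a single one:
    # (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b), "XOR:", xor_gate(a, b))
    print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

The XOR case hints at why the "switchboard" picture was so compelling: wiring a few threshold units together yields functions that no single unit computes on its own.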

But by 1965, as "Embodiments of Mind" hit the shelves, the cracks in this binary vision of the brain were beginning to show. Neurons, it turned out, didn't always behave like obedient little logic gates. They could be noisy, unpredictable, analog rather than digital. The world stood on the cusp of a new era in artificial intelligence, one that would grapple not just with the logical embodiments of mind, but with all its fuzzy, messy, gloriously human complexities.

At Stanford, the DENDRAL project was taking its first tentative steps toward automating scientific reasoning.3 Born from the audacious dream of Joshua Lederberg, a Nobel laureate with his sights set on finding life on Mars, DENDRAL aimed to build a system that could autonomously analyze Martian soil samples. At its heart was a notation and algorithm Lederberg had developed for representing organic molecules, which could systematically generate candidate molecular structures.

Edward Feigenbaum, the project's co-leader, brought in Bruce Buchanan, a philosopher turned computer scientist, to help encode a predictive theory of mass spectrometry. As Buchanan later recalled, "All the stuff that we read about in the philosophy of science we were able to begin to operationalize... We had to be very precise; we couldn't just wave our hands."4

Across the Atlantic, in the gothic spires of Edinburgh, Donald Michie was establishing one of the first AI research groups outside the United States, bringing a touch of Scottish pragmatism to the lofty dreams of artificial minds. Michie, who had cut his teeth cracking Nazi codes at Bletchley Park during the war, knew a thing or two about the gap between theoretical elegance and practical problem-solving.

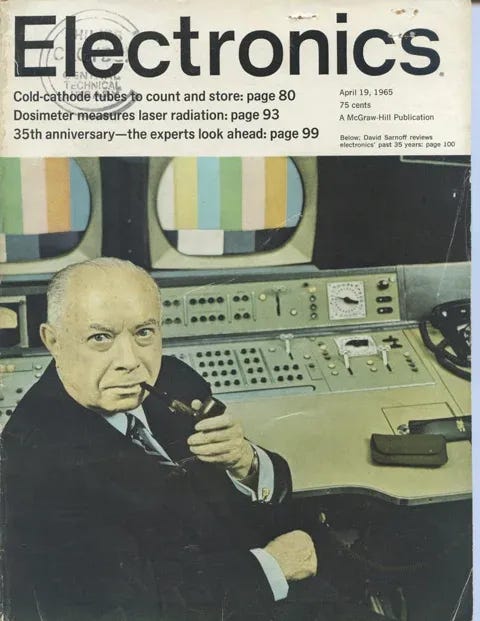

Meanwhile, Gordon Moore, a co-founder of Fairchild Semiconductor, was formulating the prediction that would later bear his name. In an article published in Electronics magazine, Moore forecast that the number of components on an integrated circuit would double every year (he later revised this to every two years). The forecast would become a self-fulfilling prophecy, driving the relentless march of computing power.5
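The arithmetic behind a fixed doubling period is simple exponential growth: after t years with doubling period T, a starting count N0 becomes N0 · 2^(t/T). The short Python sketch below is an illustration of that formula, not Moore's own calculation; the function name and the starting count of 1 are hypothetical, chosen so the results read directly as growth factors.

```python
def projected_components(n0: float, years: float, doubling_period: float) -> float:
    """Exponential growth with a fixed doubling period: N(t) = N0 * 2 ** (t / T)."""
    return n0 * 2 ** (years / doubling_period)


# Growth factor over one decade under each doubling period (starting count of 1).
print(projected_components(1, 10, 1))  # 1024.0 (doubling every year)
print(projected_components(1, 10, 2))  # 32.0   (doubling every two years)
```

The choice of doubling period matters enormously: over a single decade it is the difference between roughly a thousandfold and a roughly thirtyfold increase.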

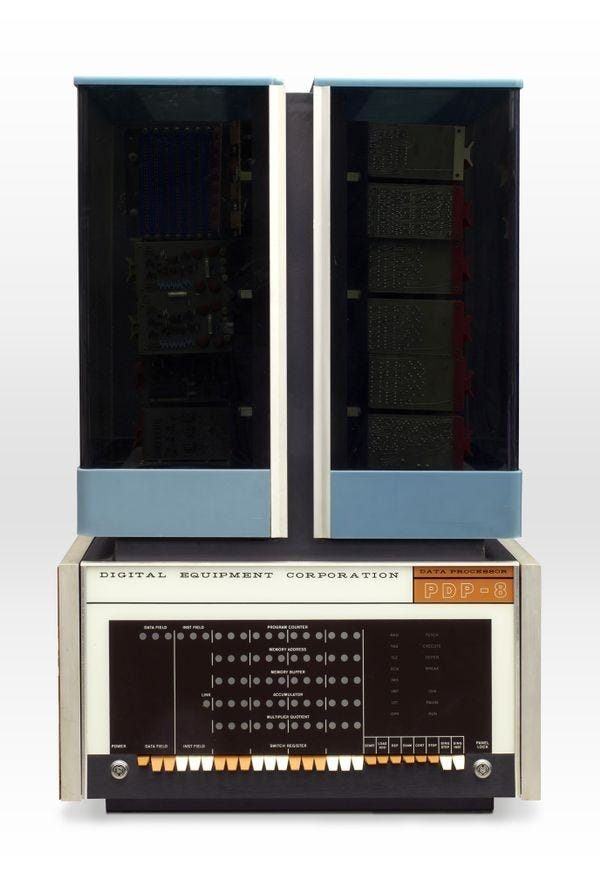

As Digital Equipment Corporation unveiled the PDP-8, the world's first commercially successful minicomputer, Moore's Law hinted at a future where computational power would expand exponentially. It was a vision of progress as predictable as a binary sequence, but the world outside the computer lab was proving far less orderly.

In Selma, Alabama, civil rights marchers crossed the Edmund Pettus Bridge, their feet tracing a path between oppression and freedom. The binary of black and white was breaking down, revealing a spectrum of human experience that defied simple categorization.6 That August, Lyndon B. Johnson signed the Voting Rights Act of 1965, which opened the political system to full and equal participation by Black and brown communities.7

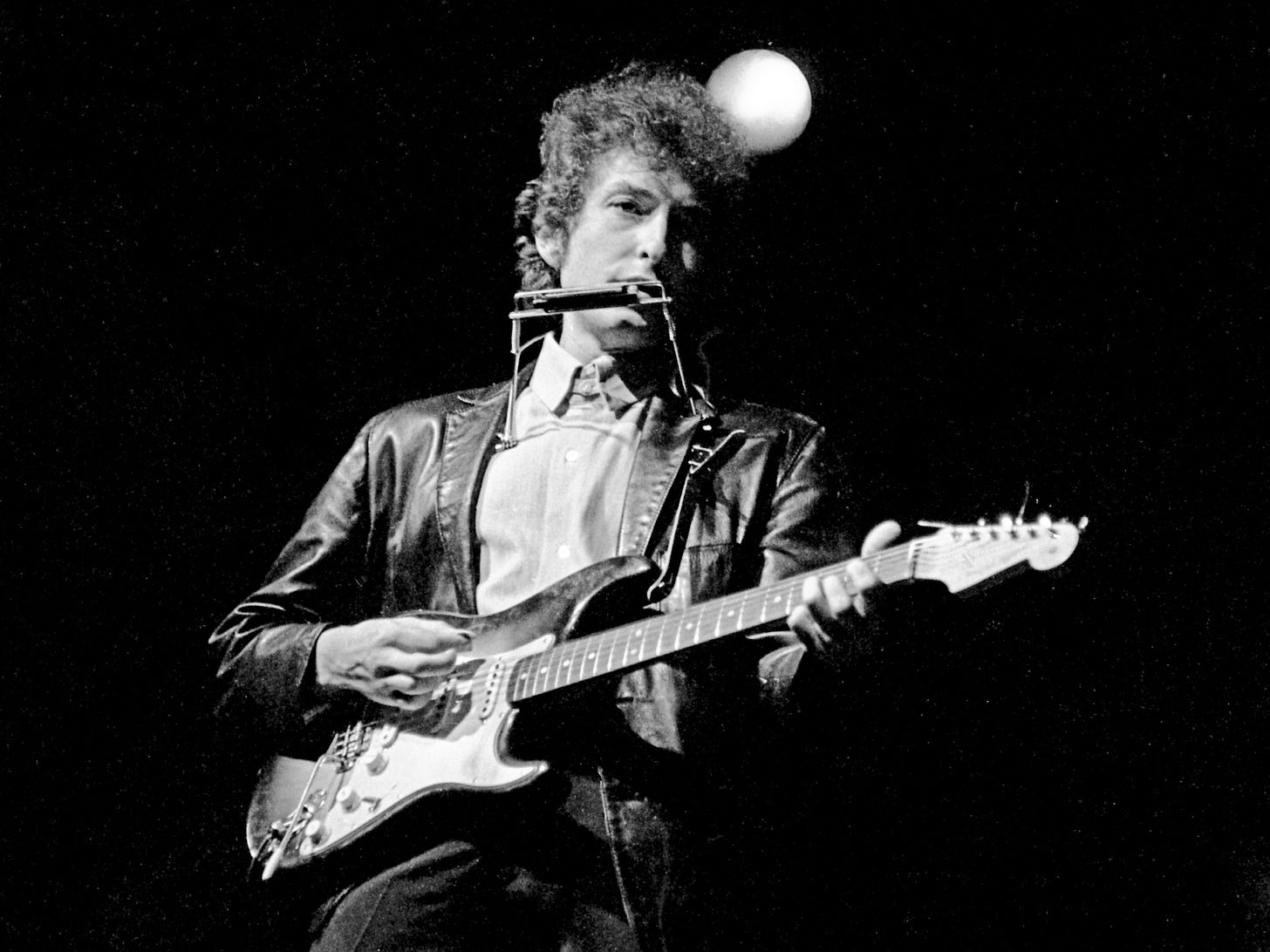

As the year unfolded, it became increasingly clear that the world operated not in the crisp certainties of ones and zeros, but in the fuzzy logic of maybes and perhapses. The Beatles sang of "Yesterday," a time that was neither here nor there but somewhere in the hazy middle.8 Bob Dylan went electric at Newport, blurring the lines between folk and rock, tradition and innovation.9 And Noam Chomsky, with his theory of universal grammar, argued that the capacity for language is an innate faculty of the human mind, governed by principles universal to all languages yet able to produce an infinite variety of sentences.10

In the realm of geopolitics, the escalation of the Vietnam War served as a stark reminder that even superpowers could become entangled in conflicts that defied simple solutions.11

Yet amidst the tumult, humanity's eyes turned skyward. The Mariner 4 spacecraft beamed back the first close-up photos of Mars, revealing a cratered, seemingly lifeless world that challenged both our imaginations and our sense of our place in the cosmos.12 For the DENDRAL team, these images were more than just a scientific milestone; they were a call to action. Though their dream of sending a PDP-10 to Mars proved impossible, their work bridged the gap between the abstract realm of scientific theory and the concrete world of computer code, laying the groundwork for expert systems and computational approaches to scientific reasoning.

Looming over the year, too, was the assassination of Malcolm X in February, a brutal reminder of the violence that could erupt when binary thinking (us versus them, black versus white) was pushed to its extreme. His death, like the complex legacy he left behind, defied easy categorization.

In this world of blurring boundaries and shifting paradigms, the work of McCulloch and Pitts took on new relevance. Their vision of the brain as an information-processing system had opened doors to new ways of thinking about mind and machine. But as "Embodiments of Mind" found its way onto bookshelves and reading lists, it served as both a celebration of how far the field had come and a reminder of how far it had yet to go.

The binary dreams of the early AI pioneers were giving way to a fuzzier, more complex vision of intelligence. Yet their foundational insights, that thought itself might be reducible to logic and that machines might one day think, would haunt and inspire researchers for decades to come, echoing through the neural networks of our ever-evolving understanding of mind and machine.